The Timeline of Artificial Intelligence – From the 1940s to 2025

- July 7th, 2025 / 6 Mins read

- Aarti Nair

The term “Artificial Intelligence” was coined by John McCarthy, often called the father of AI, in his 1955 proposal for the 1956 Dartmouth Conference. But the AI revolution began more than a decade earlier, in the 1940s.

According to a survey by NewVantage Partners, 92% of businesses have given a nod of approval to AI, reporting that it has significantly improved their operations and delivered a good return on investment (ROI). AI has also become more involved in people’s day-to-day lives. One can see it in smartwatches, recommendation engines on streaming platforms such as YouTube and Netflix, smart home systems, voice assistants, messaging platforms, automated customer support, and many everyday business applications.

Here, we’ll trace the course of AI over the last few decades by covering its milestones and how they have shaped the world: a brief evolution of AI from the 1940s to 2025.

Table of Contents

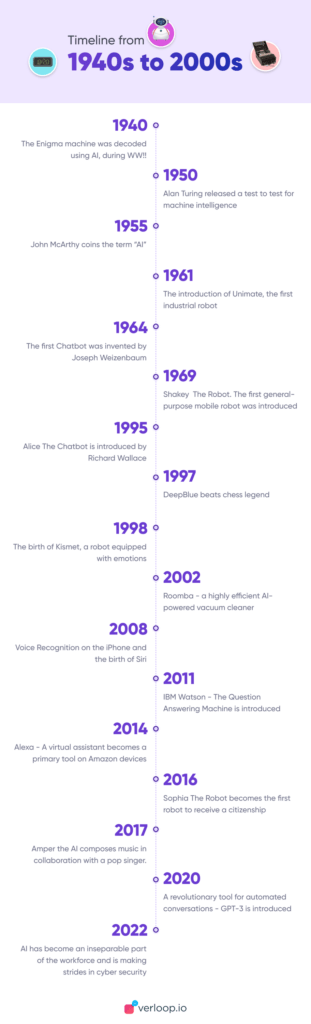

1. Enigma broken with AI (1942)

2. Test for machine intelligence by Alan Turing (1950)

3. The father of AI – John McCarthy (1955)

4. The industrial robot – Unimate (1961)

5. The first chatbot – Eliza (1964)

6. Shakey – the robot (1969)

7. The chatbot ALICE (1995)

8. Man vs Machine – DeepBlue beats chess legend (1997)

9. The emotionally equipped robot – Kismet (1998)

10. The vacuum cleaning robot – Roomba (2002)

11. Voice recognition feature on the iPhone and Siri (2008)

12. The Q/A computer system – IBM Watson (2011)

13. The pioneer of Amazon devices – Alexa (2014)

14. The first robot citizen – Sophia (2016)

15. The first AI music composer – Amper (2017)

16. A revolutionary tool for automated conversations – GPT-3 (2020)

Evolution of AI from 1900s to 2000s (2025)

AI has evolved, much as we humans have, since its conception in the 1900s. In this blog, we give you a brief history of AI and how it has changed.

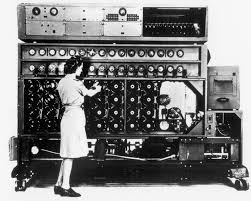

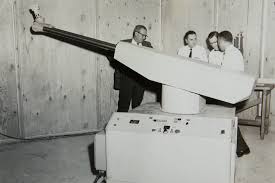

1. Enigma broken with AI (1942)

First up in our timeline of Artificial Intelligence is the Bombe. This machine, designed by Alan Turing during World War II, was a turning point in cracking German communications encoded by the Enigma machine. It sped up the decoding of messages, which allowed the Allies to react and strategise within a few hours rather than waiting for days or weeks. Turing’s approach rested on the observation that many German messages contained a predictable piece of German plaintext at a predictable point in the message.
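One way a known piece of plaintext (often called a crib) helps can be sketched in Python. The sketch relies on a well-documented property of Enigma: it never encrypted a letter as itself, so any alignment where a crib letter matches the ciphertext letter above it can be discarded. The function name and the sample strings are made up for illustration, and this shows only the elimination step, not a model of the Bombe itself.

```python
def possible_crib_positions(ciphertext: str, crib: str) -> list[int]:
    """Return offsets where no crib letter lines up with an identical ciphertext letter."""
    positions = []
    for start in range(len(ciphertext) - len(crib) + 1):
        window = ciphertext[start:start + len(crib)]
        # Enigma never mapped a letter to itself, so any coincidence rules this offset out.
        if all(c != p for c, p in zip(window, crib)):
            positions.append(start)
    return positions


# Hypothetical strings, chosen only to show the elimination at work.
candidates = possible_crib_positions("ABCDE", "BC")
```

Each surviving offset is only a candidate; deciding between them still required testing rotor settings, which is the work the Bombe mechanised.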

2. Test for machine intelligence by Alan Turing (1950)

Alan Turing, one of the world’s most renowned computer scientists and mathematicians, proposed an experiment to test for machine intelligence. The idea was to find out whether a machine can think and make decisions as rationally and intelligently as a human being. In the test, an interrogator has to figure out which answers come from a human and which from a machine. If the interrogator cannot distinguish between the two, the machine passes the test of being indistinguishable from a human being.

3. The father of AI – John McCarthy (1955)

John McCarthy is a highlight of our history of Artificial Intelligence. An American computer scientist, he coined the term Artificial Intelligence in his 1955 proposal for the Dartmouth Conference, the first-ever AI conference, held in 1956. The objective was to design a machine capable of thinking and reasoning like a human. He believed that this scientific breakthrough would happen within 5 to 500 years. Furthermore, he created the Lisp computer language in 1958, which became a standard AI programming language.

4. The industrial robot – Unimate (1961)

Unimate, created by George Devol, became the first industrial robot. It was used on a General Motors assembly line to transport die castings and weld these parts onto auto bodies. Human workers doing this job had to be extremely cautious, since it could lead to poisoning or the loss of a limb. Unimate, known for its heavy robotic arm, weighed 4000 pounds.

Several replicas were made after Unimate’s success and various industrial robots were introduced.

5. The first chatbot – Eliza (1964)

The next invention marks a huge milestone in the timeline of Artificial Intelligence, as the market for it is still thriving.

Eliza, the first-ever chatbot, was invented in the 1960s by Joseph Weizenbaum at the Artificial Intelligence Laboratory at MIT. Eliza is a psychotherapeutic chatbot that gives pre-fed responses to users, so that they feel they are talking to someone who understands their problems.

Here, the main idea is that the individual would converse more and get the notion that he/she is indeed talking to a psychiatrist. Of course, with continuous development, we are now surrounded by many chatbot providers such as Drift, Conversica, Intercom, etc.
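The idea of pre-fed responses can be sketched in a few lines of Python. This is a minimal illustration, not Weizenbaum’s original script: the rules, the `respond` function name, and the fallback reply are all assumptions made for the example.

```python
import re

# Hypothetical rules for illustration only; not taken from the original ELIZA script.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father|family)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

FALLBACK = "Please go on."


def respond(message: str) -> str:
    """Return the canned reply for the first rule that matches the message."""
    for pattern, template in RULES:
        match = pattern.search(message)
        if match:
            # Strip trailing punctuation so the echoed fragment reads naturally.
            fragment = match.group(1).rstrip(".!?")
            return template.format(fragment)
    return FALLBACK
```

Calling `respond("I feel tired today.")` echoes the user’s own words back as a question, which is what keeps the person talking; anything that matches no rule gets the neutral fallback.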

6. Shakey – the robot (1969)

Next in this timeline of Artificial Intelligence is Shakey, widely regarded as the first general-purpose mobile robot. It was able to reason about its own actions. According to its IEEE plaque, Shakey “could perceive its surroundings, infer implicit facts from explicit ones, create plans, recover from errors in plan execution, and communicate using ordinary English.” The robot had a television camera and whiskers to detect when it came close to any object. Interestingly, the robot itself would plan the route it would take so that it could carefully manoeuvre around obstacles. Shakey “communicated” with the project team through a teletype and a CRT display. The team would also observe how Shakey reacted to pranks.

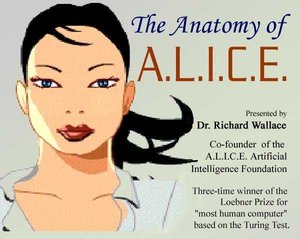

7. The chatbot ALICE (1995)

A.L.I.C.E., created by Richard Wallace, was released worldwide on November 3rd, 1995. Although Joseph Weizenbaum’s ELIZA heavily inspired the bot, major tweaks made her genuinely exceptional. ALICE is a natural language processing (NLP) program that converses with humans by applying pattern-matching rules, which enable the conversation to flow more naturally.

Over the years, ALICE has won many awards and accolades, including the Loebner Prize three times (2000, 2001 and 2004). Additionally, ALICE inspired the 2013 Spike Jonze movie Her, which portrays a relationship between a human and an artificially intelligent bot called Samantha.

8. Man vs Machine – DeepBlue beats chess legend (1997)

This was indeed a game-changer in the timeline of Artificial Intelligence. Deep Blue was a chess-playing computer developed by IBM. It was the ultimate battle of man vs machine, to figure out who outsmarts whom. Garry Kasparov, the reigning world chess champion, was challenged to beat the machine. Everyone glued to the game was left aghast when Deep Blue beat him. This left people wondering how easily machines could outsmart humans in a variety of tasks.

9. The emotionally equipped robot – Kismet (1998)

Kismet (meaning: fate) was one of the first robots able to demonstrate both social and emotional interactions with humans. It had a cartoonish face and could engage with people and make them smile. Writing the code to develop and run Kismet took the developers about 2.5 years.

Its motor outputs include vocalisations, facial expressions, and the ability to adjust the gaze direction of its eyes and the orientation of its head. With these, it portrayed a variety of emotions such as disgust, surprise, sadness, keen interest, calmness and anger.

10. The vacuum cleaning robot – Roomba (2002)

AI soon took on household cleaning as well.

With the introduction of Roomba, cleaning at home became much more efficient. The vacuum’s High-Efficiency Filter can trap 99% of allergens. Once it finishes cleaning, you just have to empty the bin, and you’re good to go.

The device has a suite of sensors that keep it from crashing into furniture, falling down the stairs and so on. Not only that, but the vacuum integrates with Amazon’s Alexa and Google Assistant, so you can have the floors cleaned using only your voice and your preferred digital assistant.

11. Voice recognition feature on the iPhone and Siri (2008)

This advancement gave users the power to quite literally *voice* their queries and concerns.

The advancement began as a minor feature update on the Apple iPhone, where users could use voice recognition in the Google app for the first time. This step seemed small initially, but it heralded a significant breakthrough in voice bots, voice search and voice assistants like Siri, Alexa and Google Home. Although highly inaccurate at first, voice recognition has since become a key feature of Artificial Intelligence thanks to significant updates and improvements.

Siri, released by Apple on the iPhone only a few years later, is a testament to the success of this minor feature. Introduced in 2011 as a virtual assistant, Siri uses voice queries and a natural language user interface to answer questions, make recommendations, and perform actions as requested by the user.

Lastly, Siri combines a conversational interface, personal context awareness, and service delegation. The user response to Siri has been so positive that it has become a key feature on all Apple devices. A user can ask Siri to call, send a message, or perform other actions on the iPhone, MacBook, and Apple Watch.

12. The Q/A computer system – IBM Watson (2011)

The famous quiz show Jeopardy! led to the development of Watson. Watson is a question-answering computer system capable of answering questions posed in natural language. Watson helps businesses predict and shape future outcomes, automate complex processes, and optimise their employees’ time.

In recent years, Watson has evolved from a question-answering computer system into a powerful machine learning asset for IBM that can also ‘see,’ ‘hear,’ ‘read,’ ‘talk,’ ‘taste,’ ‘interpret,’ ‘learn,’ and ‘recommend.’

Watson competed against champions Brad Rutter and Ken Jennings in 2011 and won the first-place prize of $1 million on the show.

13. Alexa (2014)

Next up in the history of AI is Alexa, a virtual assistant developed by Amazon. Alexa is available on smartwatches, car monitors, speakers, TVs, and various other platforms. Whenever a person says “Alexa”, the device activates and carries out the command. It can pick out a human voice even in a room filled with commotion.

Alexa can play music, provide information, deliver news and sports scores, tell the weather condition, and control your smart home. It even enables Prime members to make lists and order products from Amazon.

14. The first robot citizen – Sophia (2016)

Hanson Robotics created Sophia, a humanoid robot, with the help of Artificial Intelligence. Sophia can imitate humans’ facial expressions, language and speech, and can offer opinions on pre-defined topics. She is designed to get smarter over time.

Sophia was activated in February 2016 and introduced to the world later that same year. Thereafter, she became a Saudi Arabian citizen, making her the first robot to be granted a country’s citizenship. Additionally, she was named the first Innovation Champion by the United Nations Development Programme.

Actress Audrey Hepburn, Egyptian Queen Nefertiti, and the wife of Sophia’s inventor are the inspiration behind Sophia’s appearance.

15. The first AI music composer – Amper (2017)

Amper became the first artificially intelligent musician, producer and composer to create and put out an album. Additionally, Amper brings solutions to musicians by helping them express themselves through original music. Amper’s technology is built using a combination of music theory and AI innovation.

Amper is one of many one-of-a-kind collaborations between humans and technology.

For example, Amper itself was created through a partnership between musicians and engineers. Similarly, the song “Break Free” marks the first collaboration between an actual human musician and AI. Together, Amper and the singer Taryn Southern also co-produced the music album “I AM AI”.

16. The revolutionary tool for automated conversations – GPT-3 (2020)

GPT-3, short for Generative Pre-trained Transformer 3, was introduced to the world in May 2020, and it is truly transforming automation.

It is a revolutionary tool for automated conversations: it responds to any text that a person types into the computer with a new piece of text that is contextually appropriate. It needs only a few input examples to produce sophisticated and accurate machine-generated text.

The subtle tweaks and nuances of language are usually far too complex for machines to comprehend, so generating text that humans find easy to read has long been a difficult task for them.

However, GPT-3, developed by OpenAI, builds on natural language processing (NLP) and deep learning, enabling it to model sentence patterns rather than just reproduce human language text. It can also produce text summaries and even program code automatically.

The Present Standing of AI (As of 2025)

From support desks to boardrooms, AI isn’t just helping—it’s redefining how work gets done.

If 2023 was the breakout year for generative AI, then 2025 is the year of maturity and integration. AI is no longer just a buzzword used by tech startups—it’s deeply embedded in everyday business operations across industries.

Let’s look at how AI, and especially Gen AI, is shaping work in 2025:

1. AI is Everywhere—And It’s Getting Smarter

According to a recent report by McKinsey, over 75% of enterprise companies have integrated at least one form of AI into their operations. Whether it’s answering customer queries, writing reports, analysing datasets, or generating sales outreach, AI is now a full-time team member.

2. Generative AI Is Reshaping Workflows

From drafting legal contracts to writing code or summarising meetings, Gen AI tools like OpenAI’s GPT-4, Anthropic’s Claude, and Google’s Gemini are:

- Speeding up repetitive work
- Enhancing decision-making
- Personalising communication at scale

Work is no longer about starting from scratch. It’s about reviewing, refining, and adding value—because Gen AI handles the first draft.

💬 Customer support? Gen AI summarises chats, drafts empathetic responses, and routes complex issues.

📈 Marketing? Teams use AI to generate campaigns, segment audiences, and A/B test in real-time.

3. Voice AI Is Closing the Human Gap

Thanks to breakthroughs in speech recognition and speech synthesis, voice agents in 2025 sound almost indistinguishable from humans. They’re being used for:

- Handling Tier-1 support queries
- Booking appointments
- Following up on missed calls
- Debt collection and reminders

With speech normalisation and emotion-aware responses, these agents aren’t just reactive—they’re conversational.

4. AI Co-Pilots Are Changing How Teams Work

Every department now has a co-pilot:

- Sales uses AI to qualify leads and personalise pitches
- Finance uses AI to detect fraud and forecast cash flow
- HR uses AI to screen candidates and manage onboarding
- Support teams use AI to resolve common issues, suggest articles, and monitor sentiment

In many cases, AI doesn’t just suggest—it acts. Tools are now executing tasks on your behalf, reducing context switching and manual work.

5. AI is Becoming More Regulated and Responsible

With great power comes… audits.

Jurisdictions like the EU, India, and the US have introduced frameworks that govern:

- Responsible use of customer data
- Model transparency
- Bias detection and correction

As AI becomes more embedded, governance and explainability have become boardroom conversations.

6. AI is Moving from Cost Centre to Revenue Driver

AI used to be framed as a way to cut costs. In 2025, the conversation has flipped.

Now it’s about how AI:

- Creates better customer experiences
- Drives higher conversions
- Powers faster decisions
- Unlocks new revenue streams through automation and insights

Businesses aren’t asking “Should we adopt AI?”

They’re asking “Where else can we apply it?”

What does the future of AI look like?

Artificial Intelligence (AI) is no longer just a buzzword—it’s become a cornerstone of both business and personal innovation. As we look ahead to 2034, AI is expected to permeate every aspect of life, fuelled by growing investment, technological breakthroughs, and an increasingly AI-literate society. The road to the future of AI, however, isn’t linear. It’s shaped by parallel trends: the pursuit of more powerful models, a shift towards smaller and more efficient systems, and a strong push for open, ethical, and secure AI development.

Open Source and Compact AI Models

Generative AI models like GPT-4 have demonstrated immense capabilities, but they also revealed limitations—such as high costs, resource needs, and potential opacity. This has led to a dual shift: one toward open-source collaboration, and another toward leaner models optimised for speed and efficiency.

Projects like Meta’s Llama 3.1 (with 405 billion parameters) and Mistral Large 2 are being made available for research purposes, allowing developers and enterprises to collaborate on innovation while retaining commercial use rights. Meanwhile, compact models like GPT-4o mini (reportedly around 11 billion parameters) are becoming faster, cheaper, and more deployable, making them ideal for embedding directly into mobile devices or edge applications.

This change is redefining the AI landscape. Enterprises are moving away from relying solely on monolithic models. Instead, they are creating specialised, purpose-built models trained on proprietary data to meet unique business needs—whether it’s customer service, logistics, or product development.

Real-World Integration of AI Technologies

AI is now deeply embedded in core technological domains:

- Computer vision is improving precision in autonomous vehicles and medical diagnostics.
- Natural Language Processing (NLP) is powering intelligent chatbots, translation services, and sentiment analysis tools.
- Predictive analytics is enabling smarter financial forecasts, marketing campaigns, and inventory management.
- AI in robotics is simplifying tasks like assembly-line automation, medical surgeries, and package delivery.
- IoT and AI convergence is making smart homes, cities, and industries more efficient through real-time data analysis.

Multimodal AI Will Be the New Normal

Unimodal AI systems, which interpret one form of input, like text or images, are being replaced by multimodal AI. These systems understand and generate responses using a combination of text, voice, visuals, and even gestures, mimicking the way humans communicate.

By 2034, multimodal AI will underpin virtual assistants that can respond to a query with a text reply, an infographic, or a how-to video, whichever format best serves the user. It’s an evolution that will make interactions smoother, more human-like, and more context-aware.

AI Democratisation and DIY Model Building

No-code and low-code platforms are making it easier for non-technical users—entrepreneurs, educators, researchers—to build and deploy AI models. Much like website builders revolutionised web development, these platforms will democratise AI usage.

Users will be able to use drag-and-drop components or type prompts to customise an AI model. AutoML (Automated Machine Learning) will continue to evolve, simplifying preprocessing, hyperparameter tuning, and model validation.

This is particularly valuable for small to mid-sized businesses that lack the infrastructure or talent to develop AI from scratch. These plug-and-play AI tools will fuel rapid innovation across sectors.

Strategic Business AI & The Rise of Agentic AI

AI will become a strategic partner in decision-making. In the boardroom, AI will offer predictive modelling, real-time data analysis, and intelligent suggestions for everything from financial planning to customer acquisition.

Meanwhile, Agentic AI will enable businesses to deploy autonomous agents for specific workflows—each designed to perform a specialised task, like ticket resolution or payment verification. These agents will operate independently but collaborate with LLMs to complete multistep tasks.

Imagine a telco company: an LLM categorises an incoming query, while agents check account data, diagnose service issues, and draft a solution—all in seconds. This layered model balances general intelligence with task-specific expertise.
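The telco flow above can be sketched as a small router in Python. Everything here is hypothetical: a keyword lookup stands in for the LLM that categorises the query, and the agent functions, their names, and their replies are placeholders rather than any real product’s API.

```python
from typing import Callable


def categorise(query: str) -> str:
    """Stand-in for the LLM step: a keyword lookup, purely for illustration."""
    text = query.lower()
    if "bill" in text or "charge" in text:
        return "billing"
    if "signal" in text or "outage" in text or "slow" in text:
        return "service"
    return "general"


def billing_agent(query: str) -> str:
    return "Billing agent: checked account data and drafted a refund review."


def service_agent(query: str) -> str:
    return "Service agent: ran a line diagnostic and drafted a fix."


def general_agent(query: str) -> str:
    return "General agent: forwarded the query to a human representative."


# Each category maps to one specialised agent.
AGENTS: dict[str, Callable[[str], str]] = {
    "billing": billing_agent,
    "service": service_agent,
    "general": general_agent,
}


def handle(query: str) -> str:
    """Route the query to the agent registered for its category."""
    return AGENTS[categorise(query)](query)
```

Keeping the categoriser separate from the agents reflects the layered design described above: the general-purpose step can be swapped for a real model without touching the task-specific agents.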

Ethical AI, Regulations, and Trust

The proliferation of AI will bring regulatory scrutiny. The EU AI Act is leading the way by defining risk categories, mandating transparency, and banning use cases that infringe on fundamental rights (like social scoring or biometric surveillance).

Regulations will demand:

- Human oversight
- Explainability of AI decisions
- High standards for cybersecurity and fairness

Businesses will be expected to implement robust governance structures to oversee how AI is used both internally and externally. This includes continuously monitoring for potential misuse, tracking malicious activities, and regularly testing defences against cyberattacks to ensure security protocols remain effective and resilient.

Future Challenges: Hallucination Insurance & Shadow AI

As generative AI becomes central to business processes, the risk of misinformation from hallucinations increases. Some industries may adopt AI hallucination insurance to protect against reputational or financial losses caused by inaccurate AI outputs.

Simultaneously, the rise of shadow AI—unauthorised tools used by employees—will push companies to tighten access and implement internal data policies, ensuring only approved systems interact with sensitive information.

Quantum Computing, Bitnets, and New Architectures

The future of AI will likely intersect with innovations like:

- Quantum computing, enabling faster, more powerful model training.
- Bitnet models, which use a ternary system to compute more efficiently, reducing energy use and increasing speed.
- Neuromorphic and optical computing, offering post-Moore solutions to expand AI capabilities without increasing energy requirements.

These technologies will overcome bottlenecks in AI performance and accelerate breakthroughs across scientific and business domains.
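To make the ternary idea behind Bitnet-style models concrete, here is a simplified Python sketch. It assumes a scheme in which weights are scaled by their mean absolute value and rounded to -1, 0, or +1; the function names are made up for this example, and real implementations work on whole tensors with further details omitted here.

```python
def ternarise(weights: list[float]) -> tuple[list[int], float]:
    """Map each weight to -1, 0, or +1 and return the shared scale."""
    # Assumption: scale by the mean absolute value of the weights.
    scale = sum(abs(w) for w in weights) / len(weights)
    if scale == 0:
        return [0] * len(weights), 0.0
    # Round to the nearest integer, then clip into the ternary range.
    ternary = [max(-1, min(1, round(w / scale))) for w in weights]
    return ternary, scale


def ternary_dot(ternary: list[int], scale: float, inputs: list[float]) -> float:
    """Dot product that needs only additions and subtractions before one final scaling."""
    total = 0.0
    for t, x in zip(ternary, inputs):
        if t == 1:
            total += x
        elif t == -1:
            total -= x
    return total * scale
```

Because every weight is -1, 0, or +1, the inner loop never multiplies; it only adds, subtracts, or skips an input, and a saving of that kind is what makes this sort of model cheaper to run.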

Personalised AI Everywhere

In 2034, AI will be embedded in daily life:

- AI assistants will manage grocery shopping, create bespoke playlists, and even generate content on command.
- Personalised learning experiences will adapt in real time to an individual’s pace and style.
- AI in healthcare will assist with personalised diagnostics and treatment plans.

Whether for business or pleasure, AI will enable hyper-personalisation at scale.

Societal Impacts and Job Shifts

While AI boosts productivity, it will also displace some roles. Routine, repetitive jobs will shrink, but demand will rise for skills in:

- AI auditing
- Data governance
- Prompt engineering
- AI ethics and oversight

Governments and enterprises will need reskilling programs to ensure workforce adaptability.

Climate Trade-Offs

Training large models is energy-intensive. To stay sustainable, organisations must offset energy use by:

- Optimising model size
- Using renewable-powered data centres
- Leveraging synthetic data for efficient training

Paradoxically, AI can also help fight climate change by improving forecasting models, energy usage patterns, and disaster prediction.

AI’s future isn’t about bigger models alone—it’s about smarter, faster, fairer, and more accessible systems. As generative AI matures, agentic AI becomes operational, and ethical frameworks solidify, we’ll move into a future where AI is not just a tool, but a collaborator.

Organisations that prepare for this future now—by investing in model explainability, governance, and open innovation—will be the ones shaping what comes next.

FAQs on Timeline of AI

1. When did AI become popular?

Artificial intelligence gained most of its popularity between 1993 and 2011. With the increased use of AI agents in businesses, AI was also introduced to the masses in the form of AI agents.

AI was also incorporated into daily life during this age of inventions, through products such as the first Roomba and the first commercially sold speech recognition program for Windows computers. Further advancements were made possible by the increase in research funding that coincided with the spike in interest.

2. How has AI evolved over time?

Artificial intelligence initially centred on precise programming and logic principles to mimic human intelligence. Eventually, the big data explosion of the 1990s completely changed how we approach the field today.

3. Who coined the term Artificial Intelligence (AI)?

John McCarthy coined the term “artificial intelligence” in his 1955 proposal for a workshop at Dartmouth, which took place in 1956. The term came into wide use after that workshop.

4. When did the idea of Artificial Intelligence first emerge?

The roots of AI go back to ancient mythology, but scientifically, the idea began taking shape in the 1940s and 1950s. British mathematician Alan Turing laid the groundwork with his 1950 paper “Computing Machinery and Intelligence,” introducing the idea that machines could simulate human thought.

5. What was the significance of the 1956 Dartmouth Conference?

Often considered the birthplace of AI, the Dartmouth Conference (organised by John McCarthy, Marvin Minsky, Claude Shannon, and others) was where the term “artificial intelligence” was coined. It marked the formal launch of AI as a field of academic study.

6. What were the early goals of AI research in the 1960s and 70s?

Early researchers focused on symbolic AI, hoping to replicate human reasoning through logic and rules. Systems like ELIZA (a simple psychotherapist bot) and SHRDLU (which could interact with blocks in a virtual world) gained popularity in labs.

7. Why did AI funding drop in the 1970s and late 1980s?

These periods are known as “AI Winters”—times when enthusiasm and funding plummeted due to overpromises and underdelivered results. Despite big ambitions, early AI systems lacked the computing power and data to scale.

8. What changed in the 1990s to bring AI back into focus?

AI made a comeback through expert systems in businesses, and machine learning started to take root. IBM’s Deep Blue famously defeated chess world champion Garry Kasparov in 1997, signalling a major leap in applied AI.

9. How did AI evolve by the early 2000s?

The rise of big data, increased computing power, and improved algorithms brought about more practical AI applications. This era saw the beginnings of modern machine learning, spam filters, early recommendation engines, and basic natural language processing tools.

10. Was neural network research part of early AI?

Yes, neural networks were first proposed in the 1950s (e.g., the Perceptron by Frank Rosenblatt). But their limitations led to disinterest until the late 1980s and 1990s, when backpropagation and multi-layer networks started showing promise again.

11. Did AI influence other fields during its early evolution?

Absolutely. AI research heavily influenced robotics, linguistics, computer science, cognitive psychology, and philosophy. Many concepts, such as search algorithms and optimisation techniques, became foundational in computer science.

12. How did the early developments shape today’s AI?

The early years laid the theoretical and ethical foundations. Despite limited hardware and data, pioneers developed core ideas—symbolic reasoning, learning from data, and human-computer interaction—that evolved into today’s neural networks, generative AI, and intelligent agents.