Speech Normalisation – The Secret to Smarter Voice AI Calls

- March 5th, 2025 / 5 Mins read

- Harshitha Raja

Picture this.

You’re on a call with a Voice AI assistant, trying to confirm a meeting. The AI gets everything right—it knows your name, your request, even the context of your previous conversation.

Then it says: “Your meeting is scheduled for two zero two four dash zero three dash one five at one four colon three zero.”

Wait… what?

You pause. Your brain does a quick decode. March 15, 2024, at 2:30 PM.

Now, imagine this happening every time Voice AI reads out a date, a time, an email address, or a phone number.

It’s like talking to someone who knows what they’re saying but has no idea how to say it. And that’s the problem.

Voice AI has evolved past basic speech recognition. It understands words, context, and intent. But does it sound natural? If customers have to think about what AI just said, that’s a problem.

That’s exactly what Speech Normalisation fixes.

The Unspoken Problem in Voice AI Calls

Voice AI calling software has come a long way. Today’s AI agents can handle customer service calls, appointment bookings, troubleshooting, and more. But there’s one issue that still slips through the cracks:

The way AI speaks.

Not the voice. Not the tone. But the way it delivers structured data.

Think about the last time you used an AI voice assistant. Did it:

- Read an email address so fast you had to hear it twice?

- Read out a phone number like a billion-dollar stock price?

- Flip between “fourteen thirty” and “two thirty PM” for no reason?

These aren’t major technical flaws. But do they make conversations awkward, robotic, and frustrating?

Certainly!

These are the key challenges faced by voice AI systems without speech normalisation:

- Accuracy Issues: Without normalisation, voice AI systems struggle with understanding various accents, dialects, and speech impediments. This can lead to a higher word error rate (WER) and reduced accuracy.

- Sound Quality: The nuances in human language, such as tone and emotion, are hard to capture without normalisation. This can make the AI sound robotic and less human-like.

- Natural Language Processing (NLP) Limitations: Understanding nuances like sarcasm, humor, and idiomatic expressions is challenging. Without normalisation, these systems often struggle with complex sentence structures and multi-turn dialogues.

Speech Normalisation solves these by making Voice AI calls smoother, clearer, and more human-like.

But What is Speech Normalisation?

Speech Normalisation is the process of transforming raw, structured data into natural-sounding speech that aligns with human communication patterns.

Unlike traditional text-to-speech systems that simply read characters as they appear, Speech Normalisation interprets and formats information based on established linguistic rules. This means:

- Numbers, dates, times, phone numbers, and email addresses are spoken in a way that sounds natural to the listener.

- AI adapts its speech to match user expectations instead of using rigid, pre-programmed pronunciations.

- The output is consistent, reducing variability in how AI reads structured data across different conversations.

For example, when AI encounters:

- A date like 2024-03-15, it knows to say “March 15, 2024” instead of a literal sequence of numbers.

- A time like 14:30, it understands to say “2:30 PM” instead of “fourteen thirty.”

- An email like hello@verloop.io, it spaces out the characters properly so that users can actually note it down.

Speech Normalisation is essentially a pre-processing layer for spoken AI responses, ensuring that what AI says is not just correct, but also intuitive.

This process improves comprehension, user experience, and the overall effectiveness of AI-driven conversations.

How Speech Normalisation Works Under the Hood

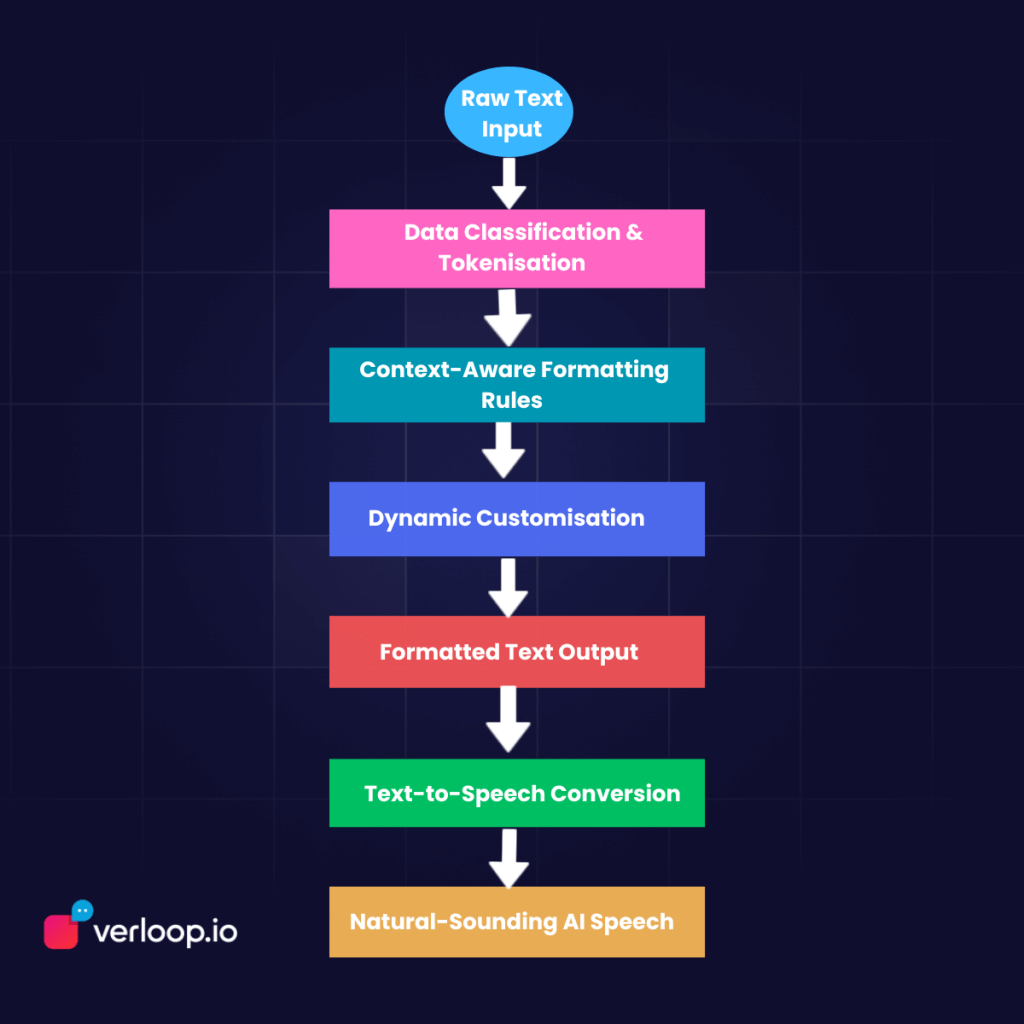

As mentioned above, Speech Normalisation operates as a pre-processing layer in text-to-speech (TTS) and voice synthesis systems. It transforms raw, structured data into a human-friendly format before the AI generates speech. This process involves linguistic processing, phonetic adaptation, and contextual rule application.

Here’s a technical breakdown of how it works:

1. Data Classification and Tokenisation

When a Voice AI system receives text input, it first identifies and classifies different data types within the text. The system scans for:

- Email addresses – Recognising patterns like hello@verloop.io as an email.

- Phone numbers – Detecting sequences like +1 987 654 3210.

- Dates and times – Identifying structures like 14:30 or 2024-03-15.

- URLs – Differentiating between website addresses and standard text.

- Currency values – Parsing values like $1,499.99.

Once identified, the text is tokenised—broken down into meaningful units for further processing.
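To make this step concrete, here is a minimal Python sketch of a classifier built on regular expressions. The patterns, the `PATTERNS` table, and the `classify` function are illustrative assumptions, not how any particular Voice AI product does it, and real-world patterns would need to cover far more formats.

```python
import re

# Hypothetical, deliberately simple patterns. They are tried in this order,
# so earlier entries win when two patterns could match at the same position.
PATTERNS = [
    ("email", r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    ("url", r"https?://[^\s,]+"),
    ("date", r"\d{4}-\d{2}-\d{2}"),
    ("time", r"\b\d{1,2}:\d{2}\b"),
    ("currency", r"\$\d+(?:,\d{3})*(?:\.\d{2})?"),
    ("phone", r"\+\d{1,3}(?: \d{3,4}){2,3}"),
]
TOKEN_RE = re.compile("|".join(f"(?P<{name}>{pat})" for name, pat in PATTERNS))


def classify(text):
    """Split text into (kind, value) tokens; unmatched spans are kind 'text'."""
    tokens = []
    pos = 0
    for match in TOKEN_RE.finditer(text):
        if match.start() > pos:
            tokens.append(("text", text[pos:match.start()]))
        # lastgroup is the name of the pattern that matched.
        tokens.append((match.lastgroup, match.group()))
        pos = match.end()
    if pos < len(text):
        tokens.append(("text", text[pos:]))
    return tokens


print(classify("Email hello@verloop.io by 14:30 on 2024-03-15"))
```

Each token keeps its original text alongside its type, so the later steps can decide how to say it without re-scanning the sentence.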

2. Context-Aware Formatting Rules

After tokenisation, Speech Normalisation applies contextual rules to ensure that structured data is converted into natural, predictable speech. This process is driven by:

Phonetic Adaptation for Speech Clarity

For certain elements like email addresses, AI applies character separation and stress placement to make them easier to follow by ear.

- Input: hello@verloop.io

- Without normalisation: “hello at verloop dot eye-oh”

- With normalisation: “H E L L O at V E R L O O P dot I O”

This ensures the AI does not blend characters into an incomprehensible blur.
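The character-separation part of this can be sketched in a few lines of Python. The function name `speak_email` and the `SYMBOLS` table are assumptions made for illustration, and the sketch does not attempt stress placement, which would normally be left to the speech engine.

```python
# Hypothetical spoken names for symbols that can appear in an address.
SYMBOLS = {"-": "dash", "_": "underscore", "+": "plus"}


def speak_part(part):
    """Spell each dot-separated label letter by letter."""
    labels = []
    for label in part.split("."):
        labels.append(" ".join(SYMBOLS.get(ch, ch.upper()) for ch in label))
    return " dot ".join(labels)


def speak_email(address):
    """Turn an email address into a letter-by-letter spoken form."""
    local, _, domain = address.partition("@")
    return f"{speak_part(local)} at {speak_part(domain)}"


print(speak_email("hello@verloop.io"))
```

For `hello@verloop.io` this produces the spaced-out form from the example above. A production system might choose to read well-known domains as whole words instead.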

Numerical Parsing for Consistency

Numbers can be read in multiple ways depending on context. Speech Normalisation ensures consistency by applying predefined phonetic rules.

- Times: 14:30 → “Two thirty PM” (instead of “Fourteen thirty”)

- Phone Numbers: +1 987 654 3210 → “Plus one, nine eight seven, six five four, three two one zero”

- Currency: $1,499.99 → “One thousand four hundred ninety-nine dollars and ninety-nine cents”
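The three rules above can be approximated with a small amount of Python. This is a sketch under stated assumptions: the helper names are invented for illustration, numbers are only handled up to 999,999, times are assumed to be 24-hour `HH:MM`, phone numbers are assumed to contain only digits, spaces, and a leading `+`, and output is lower-case.

```python
ONES = ("zero one two three four five six seven eight nine ten eleven twelve "
        "thirteen fourteen fifteen sixteen seventeen eighteen nineteen").split()
TENS = "_ _ twenty thirty forty fifty sixty seventy eighty ninety".split()


def number_to_words(n):
    """Spell out an integer from 0 to 999,999."""
    if n < 20:
        return ONES[n]
    if n < 100:
        tens, ones = divmod(n, 10)
        return TENS[tens] + (f"-{ONES[ones]}" if ones else "")
    if n < 1000:
        hundreds, rest = divmod(n, 100)
        return f"{ONES[hundreds]} hundred" + (f" {number_to_words(rest)}" if rest else "")
    thousands, rest = divmod(n, 1000)
    return f"{number_to_words(thousands)} thousand" + (f" {number_to_words(rest)}" if rest else "")


def speak_time(hhmm):
    """Convert a 24-hour HH:MM string to a 12-hour spoken form."""
    hour, minute = (int(part) for part in hhmm.split(":"))
    suffix = "PM" if hour >= 12 else "AM"
    hour12 = hour % 12 or 12
    if minute == 0:
        spoken_minute = ""
    elif minute < 10:
        spoken_minute = f" oh {ONES[minute]}"
    else:
        spoken_minute = f" {number_to_words(minute)}"
    return f"{number_to_words(hour12)}{spoken_minute} {suffix}"


def speak_phone(number):
    """Read a phone number digit by digit, pausing between groups."""
    groups = []
    for group in number.split():
        groups.append(" ".join("plus" if ch == "+" else ONES[int(ch)] for ch in group))
    return ", ".join(groups)


def speak_currency(amount):
    """Read a dollar amount such as $1,499.99 as dollars and cents."""
    dollars_text, _, cents_text = amount.lstrip("$").replace(",", "").partition(".")
    dollars, cents = int(dollars_text), int(cents_text or 0)
    spoken = f"{number_to_words(dollars)} {'dollar' if dollars == 1 else 'dollars'}"
    if cents:
        spoken += f" and {number_to_words(cents)} {'cent' if cents == 1 else 'cents'}"
    return spoken


print(speak_time("14:30"))
print(speak_phone("+1 987 654 3210"))
print(speak_currency("$1,499.99"))
```

The point of the sketch is that each data type gets exactly one rule, so the same input is always read the same way.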

Pattern-Based Restructuring for Dates and URLs

Some elements need to be reformatted entirely before AI reads them out.

- Dates: 2024-03-15 → “March 15, 2024”

- URLs: https://verloop.io/contact → “Verloop dot I O slash contact”

This restructuring ensures that AI output aligns with how humans naturally process and communicate information.
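As a rough illustration of this restructuring, the Python sketch below uses only the standard library. The function names are hypothetical, and the rule that very short domain labels such as "io" are spelled out letter by letter is an assumption chosen to reproduce the example above, not a universal convention.

```python
from datetime import date
from urllib.parse import urlsplit

MONTHS = ("January", "February", "March", "April", "May", "June", "July",
          "August", "September", "October", "November", "December")


def speak_date(iso_date):
    """Reorder an ISO date (YYYY-MM-DD) into month, day, year."""
    d = date.fromisoformat(iso_date)
    return f"{MONTHS[d.month - 1]} {d.day}, {d.year}"


def speak_url(url):
    """Drop the scheme and read the host and path the way a person would."""
    parts = urlsplit(url)
    labels = []
    for label in parts.hostname.split("."):
        # Assumed rule: labels of one or two letters are spelled out.
        labels.append(" ".join(label.upper()) if len(label) <= 2 else label)
    spoken = " dot ".join(labels)
    for segment in parts.path.split("/"):
        if segment:
            spoken += f" slash {segment}"
    return spoken[:1].upper() + spoken[1:]


print(speak_date("2024-03-15"))
print(speak_url("https://verloop.io/contact"))
```

Note that the scheme (`https://`) is simply dropped, since callers rarely need to hear it.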

3. Dynamic Customisation Based on Use Case

Different industries require different levels of Speech Normalisation. The AI system allows customisation based on specific needs.

For example, a financial institution may enable strict currency formatting ($15,000 → “Fifteen thousand dollars”) while a customer support bot might prioritise phone number clarity over verbose number pronunciation.

Some AI platforms also provide real-time Speech Normalisation adjustments, allowing developers to toggle settings for speed vs. clarity optimisation.
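One way to picture this kind of customisation is as a per-industry profile that switches individual rules on or off. The sketch below is purely illustrative: `NormalisationProfile`, the two example profiles, and the placeholder handlers are all assumptions rather than any platform's actual configuration model.

```python
from dataclasses import dataclass

DIGITS = "zero one two three four five six seven eight nine".split()


@dataclass(frozen=True)
class NormalisationProfile:
    name: str
    enabled: frozenset  # token kinds this profile rewrites


# Hypothetical profiles for two of the use cases described above.
BANKING = NormalisationProfile("banking", frozenset({"currency", "date"}))
SUPPORT = NormalisationProfile("support", frozenset({"phone", "email"}))


def phone_digits(value):
    """Read a phone number digit by digit, pausing between groups."""
    return ", ".join(
        " ".join("plus" if ch == "+" else DIGITS[int(ch)] for ch in group)
        for group in value.split()
    )


# Placeholder handlers; a real system would plug in full rules here.
HANDLERS = {
    "phone": phone_digits,
    "currency": lambda value: value.lstrip("$").replace(",", "") + " dollars",
}


def normalise(tokens, profile, handlers):
    """Rewrite only the token kinds that the profile enables."""
    out = []
    for kind, value in tokens:
        handler = handlers.get(kind)
        if kind in profile.enabled and handler is not None:
            out.append(handler(value))
        else:
            out.append(value)
    return "".join(out)


tokens = [("text", "Call "), ("phone", "+1 987"),
          ("text", " about "), ("currency", "$15,000")]
print(normalise(tokens, SUPPORT, HANDLERS))
print(normalise(tokens, BANKING, HANDLERS))
```

Keeping the profile separate from the rules means the same rule set can serve different industries without duplicating any formatting logic.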

4. Integration With Text-to-Speech (TTS) Systems

Once the structured data is formatted correctly, the final normalised text is fed into a Text-to-Speech (TTS) engine. The AI then converts this structured, human-friendly output into synthesised speech.

Most modern TTS engines rely on neural speech models such as Tacotron or WaveNet, or on cloud services such as Amazon Polly, to generate a natural voice tone and rhythm. Speech Normalisation acts as a pre-step to eliminate errors before the AI ever starts speaking.
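The hand-off itself is simple, and can be sketched as a small Python pipeline. Everything here is hypothetical: `TTSEngine` is an invented interface, `FakeEngine` is a stand-in that returns bytes instead of audio, and a real integration would call the chosen engine's own SDK.

```python
from typing import Callable, Protocol


class TTSEngine(Protocol):
    """Assumed minimal interface: text in, audio bytes out."""

    def synthesise(self, text: str) -> bytes: ...


class FakeEngine:
    """Stand-in engine that records what it was asked to say."""

    def __init__(self):
        self.received = []

    def synthesise(self, text):
        self.received.append(text)
        return text.encode("utf-8")


def speak(raw_text: str, normalise: Callable[[str], str], engine: TTSEngine) -> bytes:
    """Normalise first, then synthesise: the engine never sees raw data."""
    return engine.synthesise(normalise(raw_text))


engine = FakeEngine()
speak("Meet at 14:30", lambda t: t.replace("14:30", "two thirty PM"), engine)
print(engine.received)
```

Because normalisation happens before synthesis, it can be tested on plain strings without generating any audio.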

Why Speech Normalisation Is a Game-Changer for Voice AI Calling Software

Technicalities aside, why is speech normalisation the secret sauce for voice AI technology? What does it mean for your end customers?

AI Sounds Natural, Not Robotic

Customers expect AI to be fast and accurate. But accuracy alone isn’t enough. If Voice AI calls sound robotic, they feel unnatural—even if the information is correct.

Speech Normalisation helps bridge that gap between functional AI and intelligent AI by making conversations flow like real human interactions.

No More Misunderstandings

If customers can’t understand AI on the first try, they’ll either:

- Ask for a repeat (which slows everything down), or

- Get frustrated and hang up.

Both are bad for business. When AI delivers information in a natural, predictable way, calls become smoother and more efficient.

Faster Conversations, Better CX

Every second matters in Voice AI calls. If users spend extra time processing AI’s response, it increases call duration and customer frustration.

Speech Normalisation reduces cognitive load by ensuring:

- Users instantly understand what AI says.

- Conversations move faster.

- Customers leave with a better experience.

Reduces Manual Fixes and Custom Prompting

Before Speech Normalisation, businesses had to manually tweak AI prompts to make sure numbers, emails, and times sounded right.

That meant:

- Adding extra spaces between characters

- Using phonetic spelling workarounds

- Writing multiple versions of the same response

Now? AI handles it automatically. No extra work required.

A Smarter Voice AI Calling Experience for Every Industry

Different industries need different levels of speech precision.

- Banking & FinTech – Large currency values need to be read correctly.

- Customer Support – Callers need clear, fast responses.

- Healthcare – Dates, times, and numbers must be precise.

- E-commerce – Order numbers and tracking details shouldn’t confuse customers.

Speech Normalisation makes AI adaptable to industry-specific needs, improving customer interactions across the board.

Where Speech Normalisation Fits in the Future of Voice AI Calls

Voice AI calling software is already transforming customer service, telemarketing, appointment scheduling, and more. But for AI to truly replace human agents in calls, it needs more than just intelligence—it needs fluency.

Think about the best human call agents.

They don’t just deliver correct information. They deliver it in a way that’s clear, concise, and easy to understand.

Speech Normalisation ensures AI does the same.

The future of Voice AI isn’t just about understanding what customers say—it’s about responding in a way that feels effortless, familiar, and human.

And that’s the real breakthrough.

Final Thought

Your AI already understands customers. Now, it’s time to make sure customers don’t have to work to understand it.

Speech Normalisation isn’t just about fixing awkward pronunciations—it’s about making conversations effortless, where information flows the way it should. No second-guessing, no unnecessary friction.

If you’ve ever listened to your Voice AI and thought, this could sound better, it probably can.

Let’s fix that. Get in touch, and let’s make your AI sound as natural as it should.