Conversational Chatbot Security: Threats, Measures, Best Practices

Are you considering using a conversational chatbot for your customer support? This post walks through the main security risks that come with chatbots and the countermeasures that address them.

From Siri to Alexa, chatbots to voicebots, devices are starting to give answers. Conversational AI services have embedded themselves across various networks, creating seamless conversations and better user experiences.

But just like any new technology, it comes with baggage – chatbot security risks.

As the first touchpoint for many of your customers, chatbots are entrusted with sensitive information, which can include user names, email IDs, phone numbers and ID numbers.

If the bot does not store such data securely, it becomes an easy target for hackers. Upholding the integrity of the information your users trust you with is vital, and making sure only the right people can access it means addressing security concerns promptly.

Here’s what you will find in this post:

Are chatbots secure? An overview of threats and vulnerabilities

4 ways to ensure chatbot security

Pass or fail – How to test your chatbot security measures

New methods to deploy stronger chatbot security

A must on your chatbot security checklist: Unified chatbot management

Are chatbots secure? An overview of risks associated with chatbot security

Chatbot security risks fall into two categories, namely threats and vulnerabilities.

One-off events, such as malware and DDoS (Distributed Denial of Service) attacks, are known as threats. Businesses are often hit by targeted attacks that leave employees locked out of their own systems. Threats to expose consumer data are also on the rise, which highlights the risks of using chatbots.

On the other hand, vulnerabilities are faults in the system that allow cybercriminals to break into it. Vulnerabilities allow threats to get inside the system, and thus they both go hand-in-hand.

Vulnerabilities result from coding errors, weak safeguards and user mistakes. Designing a system that works at all is hard enough, so building one that is completely hack-proof is nearly impossible.

Chatbot development typically begins with writing code, which is then tested for cracks, and some are almost always present. These tiny cracks can go unnoticed until it is too late, which is why a cybersecurity professional should look for them beforehand.

The processes for finding and fixing chatbot vulnerabilities keep evolving so that problems are detected and resolved early.

The specific chatbot security risks are a lot more diverse and unpredictable. Regardless, they all fall into the two categories of threats and vulnerabilities.

Threats

There are many types of threats, including employee impersonation, ransomware and other malware, phishing, whaling and the repurposing of bots by hackers.

If not acted upon, threats can lead to data theft and alterations, consequently causing serious damage to your business and customers.

Let’s understand what these threats are in detail.

1. Ransomware

As the name suggests, ransomware is a form of malware that encrypts a victim’s files and demands a ransom to restore access. Some variants also threaten to expose the victim’s data unless the ransom is paid.

2. Malware

Malware is software designed to cause harm to a device or server. Ransomware, for instance, is a type of malware. Other examples include trojans, spyware and adware.

3. Phishing

Phishing is the fraudulent act of seeking sensitive information from people by posing as a legitimate institution or individual. Attackers usually ask for details such as personally identifiable information and banking or credit card details.

4. Whaling

Whaling is similar to phishing, but the attackers target high-profile and senior employees within the company.

These are some common examples of threats associated with chatbots. Such threats may lead to data theft and alterations, along with impersonation of individuals and re-purposing of bots.

Vulnerabilities

Unencrypted chats and a lack of security protocols are among the vulnerabilities that pave the way for threats.

If the chatbot does not communicate over HTTPS, hackers may also obtain back-door access to the system through it. Sometimes, however, the issue lies in the hosting platform rather than in the chatbot itself.

Chatbot security checklist: How to secure your chatbot to tackle threats and vulnerabilities

When all is said and done, you want to sleep well knowing you’ve done your best to keep the information your chatbot handles secure. So, what are the measures that keep your chatbot security in good shape?

There are four main ways to protect your system from chatbot security concerns: encryption, authentication, processes and protocols, and education. A few other methods are worth considering as well. Let us take a detailed look at them.

1. End-to-End encryption

We are all familiar with the message “This chat is end-to-end encrypted”, most likely from WhatsApp. It means that nobody other than the sender and the receiver can read the conversation, which keeps your chat secure.

Several chatbot designers have started using end-to-end encryption to increase security, and it is among the most effective methods of doing so.

Encryption also matters for compliance. Article 32 of the GDPR names the pseudonymisation and encryption of personal data among the appropriate technical measures for protecting it. The regulation does not mandate end-to-end encryption specifically, but encrypting conversations is a practical way to help meet that obligation.
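
De-identifying personal data before it reaches your chat logs can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a complete privacy solution and not a substitute for encryption. The key, the field names and the helper functions are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would come from a secrets manager, not source code.
PSEUDONYM_KEY = b"replace-with-a-long-random-secret"


def pseudonymise(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Return a stable, keyed pseudonym for an email address, phone number, etc."""
    normalised = identifier.strip().lower().encode("utf-8")
    return hmac.new(key, normalised, hashlib.sha256).hexdigest()


def deidentify_record(record: dict, sensitive_fields: set) -> dict:
    """Copy a chat record, replacing the sensitive fields with pseudonyms."""
    return {
        field: pseudonymise(str(value)) if field in sensitive_fields else value
        for field, value in record.items()
    }


record = {"email": "Jane@Example.com", "phone": "+1 555 0100", "message": "Where is my order?"}
safe = deidentify_record(record, {"email", "phone"})
```

Because the pseudonym is keyed, the same customer always maps to the same value, so conversations can still be linked for support purposes without storing the raw email address or phone number.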

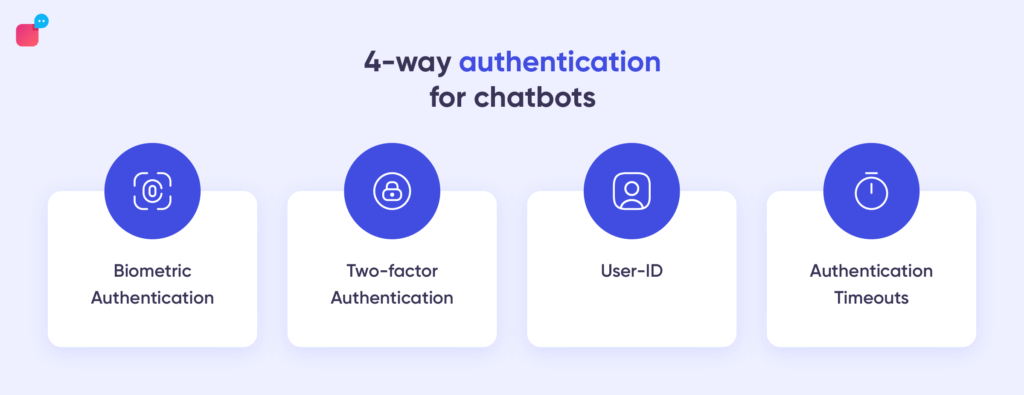

2. Authentication processes

Chatbots rely on two important security processes: authentication, which verifies the user’s identity, and authorisation, which grants the user access to information or functions.

These processes ensure that the person using the device is legitimate and not fraudulent.

Specifically, authentication refers to the steps used to confirm user identity, and it is required before access to any portal is granted. Together, authentication and authorisation make for a robust security setup, and authentication itself comes in several forms.

a. Biometric authentication

You might have heard about “biometric attendance” in educational institutions. Such systems are considered more reliable because they identify people by their fingerprints.

Biometric authentication uses an individual’s physical characteristics to verify identity. Software and devices have been using biometric authentication for a long time, so it is not a new method.

As of today, iris and fingerprint scans are popular means of biometric verification. We commonly see them as phone locks.

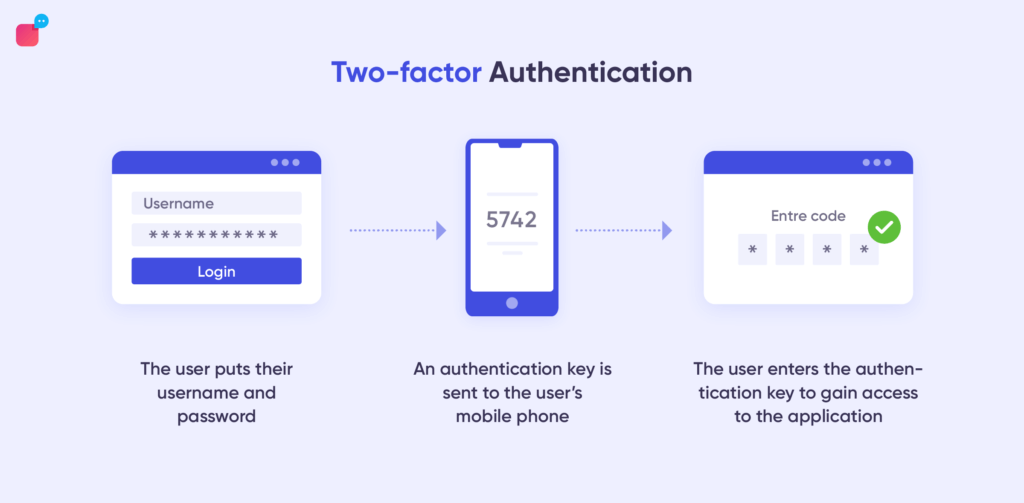

b. Two-factor authentication

This verification process is quite old-school, but it comes with the added advantage of being tried and tested. It is still in use because it works well as a method of defence.

The individual is required to confirm their identity through two different factors, for example a password and a code delivered to their phone. Several institutions, such as banks, use two-factor authentication.
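
One common second factor is a time-based one-time password (TOTP) from an authenticator app. The post does not say which second factor to use, so treat the following as one possible sketch: it follows the standard HOTP and TOTP algorithms (RFC 4226 and RFC 6238) using only the Python standard library, and the function names are our own.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226)."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low nibble picks the offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238) for the given Unix time."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_second_factor(secret: bytes, submitted: str, at=None) -> bool:
    """Compare the submitted code with the expected one in constant time."""
    return hmac.compare_digest(totp(secret, at), submitted)
```

The shared secret would be generated once per user and stored server-side. A production setup would normally also accept the adjacent time step to allow for clock drift, which this sketch leaves out.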

c. User ID

The oldest method of establishing security is still relevant and is likely to remain so.

We all remember getting creative with our user IDs as children. Even now, a user ID with a password is the simplest way to keep your accounts safe, and it remains effective when done well.

Creating a unique user ID is the most widespread method of security for most people. Login credentials are hard to break when the ID is paired with a strong password that mixes upper and lower case letters, digits and symbols.
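
On the server side, strong passwords only help if they are stored safely. The sketch below checks the mix of cases, digits and symbols described above and then stores a salted hash rather than the password itself. It uses PBKDF2 from the Python standard library. The minimum length and iteration count are assumptions to tune against current guidance, and the function names are hypothetical.

```python
import hashlib
import hmac
import os
import string


def is_strong(password: str, min_length: int = 12) -> bool:
    """Check for length, mixed case, digits and symbols."""
    return (
        len(password) >= min_length
        and any(c.islower() for c in password)
        and any(c.isupper() for c in password)
        and any(c.isdigit() for c in password)
        and any(c in string.punctuation for c in password)
    )


def hash_password(password: str, salt=None, iterations: int = 600_000):
    """Derive a salted PBKDF2-HMAC-SHA256 hash; store the salt and hash, never the password."""
    salt = os.urandom(16) if salt is None else salt
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return salt, digest


def check_password(password: str, salt: bytes, expected: bytes, iterations: int = 600_000) -> bool:
    """Re-derive the hash from the login attempt and compare in constant time."""
    _, digest = hash_password(password, salt, iterations)
    return hmac.compare_digest(digest, expected)
```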

d. Authentication Timeouts

A ticking clock during the authentication process brings a greater level of security. In this approach, verification tokens are valid only for a fixed time. When the user tries to gain access, a time-sensitive code is sent to the user’s email ID or phone number. Once the token expires, it can no longer be used to gain access. This practice limits repeated attempts to get at the data.
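
A time-limited code with a cap on attempts could look roughly like this. It is a simplified in-memory sketch: the class name, the five-minute lifetime and the three-attempt limit are assumptions, and a real deployment would deliver the code by email or SMS and keep the state in a shared store.

```python
import hmac
import secrets
import time


class OneTimeCodes:
    """Issue short-lived verification codes and reject them once they expire."""

    def __init__(self, ttl_seconds: float = 300, max_attempts: int = 3, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.max_attempts = max_attempts
        self.clock = clock
        self._pending = {}  # user_id -> [code, expires_at, attempts_left]

    def issue(self, user_id: str) -> str:
        code = f"{secrets.randbelow(10 ** 6):06d}"
        self._pending[user_id] = [code, self.clock() + self.ttl, self.max_attempts]
        return code  # would be sent to the user's email or phone

    def verify(self, user_id: str, submitted: str) -> bool:
        entry = self._pending.get(user_id)
        if entry is None:
            return False
        code, expires_at, attempts_left = entry
        if self.clock() >= expires_at or attempts_left <= 0:
            del self._pending[user_id]  # expired or too many tries
            return False
        entry[2] -= 1
        if hmac.compare_digest(code, submitted):
            del self._pending[user_id]  # each code works only once
            return True
        return False
```

Passing the clock in as a parameter is a design choice that makes the expiry behaviour easy to test without waiting for real time to pass.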

3. Processes and Protocols

You must have noticed the “HTTPS” at the beginning of most website addresses. It has become the default for secure communication on the web.

Your security teams must ensure that any data transfer takes place over HTTPS, that is, over encrypted connections. These connections are protected by Transport Layer Security (TLS), the successor to the now-deprecated Secure Sockets Layer (SSL). Encrypted transport greatly reduces the risk of anyone intercepting or tampering with the data in transit, although it does not replace the other measures described here.
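
Enforcing encrypted transport on the client side can be sketched with Python’s standard `ssl` module. The helper names and the example URL are hypothetical, and TLS 1.2 as the minimum version is an assumption you may want to raise.

```python
import ssl
from urllib.parse import urlparse


def strict_tls_context() -> ssl.SSLContext:
    """Client context that verifies certificates and refuses anything older than TLS 1.2."""
    context = ssl.create_default_context()  # certificate and hostname checks are on by default
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def require_https(url: str) -> str:
    """Reject endpoints that would send chat data over plain HTTP."""
    if urlparse(url).scheme != "https":
        raise ValueError(f"refusing non-HTTPS endpoint: {url}")
    return url
```

A caller could then pass both to the standard library, for example `urllib.request.urlopen(require_https(url), context=strict_tls_context())`.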

4. Education

Human error is among the most significant causes of successful cyberattacks, so educating people about it is crucial. The combination of a flawed system and naive users gives hackers open access to the system.

The importance of preventing cybercrime has gained recognition in recent years, but customers and employees are still the most prone to error. Security issues will persist unless everyone is educated about how to use conversational chatbots securely.

An effective chatbot security strategy should include training workshops on crucial topics, run by IT experts. This training builds the skill set of your employees. Moreover, it fosters your customers’ confidence in your chatbot security system.

Even though you cannot train a customer, you can still provide a roadmap or instructions about navigating your systems to avoid any issues.

5. Other methods

Below we discuss some other methods that you can consider.

A. Self-erasing messages

This feature is fairly self-explanatory, and it is popular on several platforms, such as Snapchat and WhatsApp. Some time after the conversation ends, the messages erase themselves automatically. Once erased, such messages are meant to be unrecoverable.
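
The idea can be sketched as a message store with a retention window. This is a simplified in-memory illustration with a hypothetical class name and a one-hour default. It does not describe how any particular platform implements the feature, and a real system would also have to erase backups and logs.

```python
import time


class EphemeralChat:
    """In-memory message store that drops messages after a retention window."""

    def __init__(self, retention_seconds: float = 3600, clock=time.monotonic):
        self.retention = retention_seconds
        self.clock = clock
        self._messages = []  # list of (expires_at, text)

    def add(self, text: str) -> None:
        self._messages.append((self.clock() + self.retention, text))

    def purge_expired(self) -> int:
        """Delete expired messages and return how many were removed."""
        now = self.clock()
        before = len(self._messages)
        self._messages = [(exp, text) for exp, text in self._messages if exp > now]
        return before - len(self._messages)

    def visible(self) -> list:
        """Return only the messages that are still within the retention window."""
        self.purge_expired()
        return [text for _, text in self._messages]
```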

B. Web Application Firewall (WAF)

A WAF protects your chatbot by filtering incoming traffic and blocking requests from malicious addresses. This keeps malicious traffic and harmful requests, such as code injection attempts, from reaching your chatbot.
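
A toy version of that filtering logic is shown below, purely to illustrate the idea. Real WAFs are dedicated products with far richer rule sets. The blocked network (a documentation-only address range) and the three patterns are hypothetical examples.

```python
import ipaddress
import re

# Hypothetical blocklist and signatures, for illustration only.
BLOCKED_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]
SUSPICIOUS_PATTERNS = [
    re.compile(r"<\s*script", re.IGNORECASE),              # script injection
    re.compile(r"\bunion\b.+\bselect\b", re.IGNORECASE),   # SQL injection probe
    re.compile(r"\.\./"),                                  # path traversal
]


def allow_request(client_ip: str, payload: str) -> bool:
    """Return False for blocked addresses or payloads matching a known attack pattern."""
    address = ipaddress.ip_address(client_ip)
    if any(address in network for network in BLOCKED_NETWORKS):
        return False
    return not any(pattern.search(payload) for pattern in SUSPICIOUS_PATTERNS)
```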

How to test your chatbot security measures?

The best way to test your system’s security mechanisms is to hire experienced designers and security specialists to do a test run and offer suggestions. You can also use the following methods to check the reliability of your conversational AI chatbot security system.

1. Penetration testing

This method helps you detect vulnerabilities in your system, and you may know it by the term “ethical hacking”. It is a growing field in the IT industry, in which cybersecurity professionals or automated tools audit a system by attacking it in a controlled way.

2. API security testing

The application programming interface (API) of your system should undergo testing to weed out any vulnerabilities. Even though several tools can do this, you should consider hiring a security specialist, because they have up-to-date software and knowledge and can find subtle vulnerabilities.

3. Comprehensive UX testing

A good design translates to a good user experience, and there is no better way to judge a system than by its user experience. While testing your chatbot, pay attention to factors such as whether it meets your expectations, how well it engages users and any surface faults.

New methods for strong chatbot security

As one might expect, new methods of establishing chatbot security have emerged, making the online world safer. They play a crucial role in protecting chatbots against threats and detecting vulnerabilities.

Behavioural analytics and improved AI are among the most effective of these techniques.

1. User Behavioural Analytics (UBA)

UBA uses processes that study how users normally behave. With the help of statistics and algorithms, these programs can detect behaviour that deviates from the norm.

Such behaviour could indicate a security threat, and detecting it at the right time prevents a cybersecurity crime from taking place. UBA will eventually become a powerful tool in chatbot security systems as technology advances.
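
As a very simple statistical illustration, the check below flags a value that sits far from a user’s own baseline, measured in standard deviations. Real UBA products combine many more signals. The function name, the threshold of three and the sample numbers are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history: list, observed: float, threshold: float = 3.0) -> bool:
    """Flag a value more than `threshold` standard deviations from the user's baseline."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    baseline, spread = mean(history), stdev(history)
    if spread == 0:
        return observed != baseline
    return abs(observed - baseline) / spread > threshold


# Hypothetical data: messages per session for one user over the past week.
messages_per_session = [12, 15, 11, 14, 13, 12, 16]
```

With this history, a session of 14 messages looks normal, while a sudden burst of 90 messages would be flagged for review.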

2. Improvements in AI

In the virtual world, artificial intelligence is both a boon and a curse. It is used for breaking into as well as defending systems. However, with the continual development in this sector, artificial intelligence will be leveraged for increasing cybersecurity.

AI can add a security layer that goes beyond current measures, thanks to its ability to sift through large volumes of data to spot abnormalities and detect threats and vulnerabilities.

A must for your chatbot security framework: A unified space for bot management

Chatbot activity must be carefully monitored at all times, and access to your chatbot controls should be granted only to the right personnel. A central space to manage your bot activity should be in place, where you can oversee incoming tickets handled by the chatbot, the tracking of relayed information, chat hand-offs, the agents assigned to chats and so on. A transparent oversight mechanism ensures better command over the data your chatbot handles.

A powerful chatbot builder allows you to set different authorisation levels, so you know precisely who is authorised to exercise control. For example, Verloop.io’s AI automation allows only administrators to make changes to bot recipes and to assign tickets to agents. This ensures that key controls rest with authorised personnel.
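
Authorisation levels of this kind are often modelled as role-based access control. The sketch below is a generic illustration of the concept and does not describe Verloop.io’s actual implementation. The role names, permission names and functions are hypothetical.

```python
from enum import Enum


class Role(Enum):
    ADMINISTRATOR = "administrator"
    AGENT = "agent"


# Hypothetical permission names; the mapping mirrors the example in the text.
PERMISSIONS = {
    Role.ADMINISTRATOR: {"edit_bot_recipe", "assign_ticket", "view_chat"},
    Role.AGENT: {"view_chat"},
}


def is_authorised(role: Role, action: str) -> bool:
    """Return True only if the role has been granted the action."""
    return action in PERMISSIONS.get(role, set())


def edit_bot_recipe(role: Role, recipe: dict, changes: dict) -> dict:
    """Apply changes to a recipe, refusing callers without the right permission."""
    if not is_authorised(role, "edit_bot_recipe"):
        raise PermissionError(f"{role.value} may not edit bot recipes")
    return {**recipe, **changes}
```

Keeping the permission table in one place makes it easy to audit who can do what, which is the point of a unified management space.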

FAQs on chatbot security

1. Can chatbots be hacked?

No system is hack-proof, and if your chatbot is not secured against vulnerabilities, it can be hacked.

2. How will customer information in the chatbot be secured to protect the privacy of the customer?

Customer information in the chatbot can be secured with end-to-end encryption, so that only the intended parties can read it. The data can be further protected with two-factor authentication (2FA), with access given only to certain employees of the company.

3. What is a chatbot application?

Chatbots are computer programs designed to help humans interact with machines and find answers to common questions. AI-powered chatbots are more advanced and can have two-way conversations with humans in natural language.

4. How to tackle the risks of conversational AI chatbot security?

You can reduce the security risks associated with conversational AI chatbots by implementing processes and protocols such as end-to-end encryption and two-factor authentication, installing anti-malware software, following regional data regulation laws, and educating employees and users about the various threats.

Conclusion

As in other areas of the IT sector, improvements in chatbot security measures will be met by more advanced attacks. That contest, however, will only make way for further advancements in the field.

Chatbot technology is no longer new to the masses, and it is widespread enough for specialists to understand its weaknesses and the countermeasures for them.

Chatbot security specialists are best placed to provide quality education on this subject. However, this walkthrough should give you an idea of the processes used to ensure cyber safety.

Verloop.io ensures your and your customers’ data is safe, and interactions are secure. Talk to our team to understand our security measures in detail.